See Salesforce Backup & Recovery in Action

Watch this quick demo to see how we make it fast and easy to back up and restore your Salesforce data. From automated, near real-time backups to point-and-click restores, our platform gives you full control and visibility in one simple interface.

Why is on-prem Salesforce backup and recovery beneficial?

All-In-One Solution for Salesforce

Granular Control

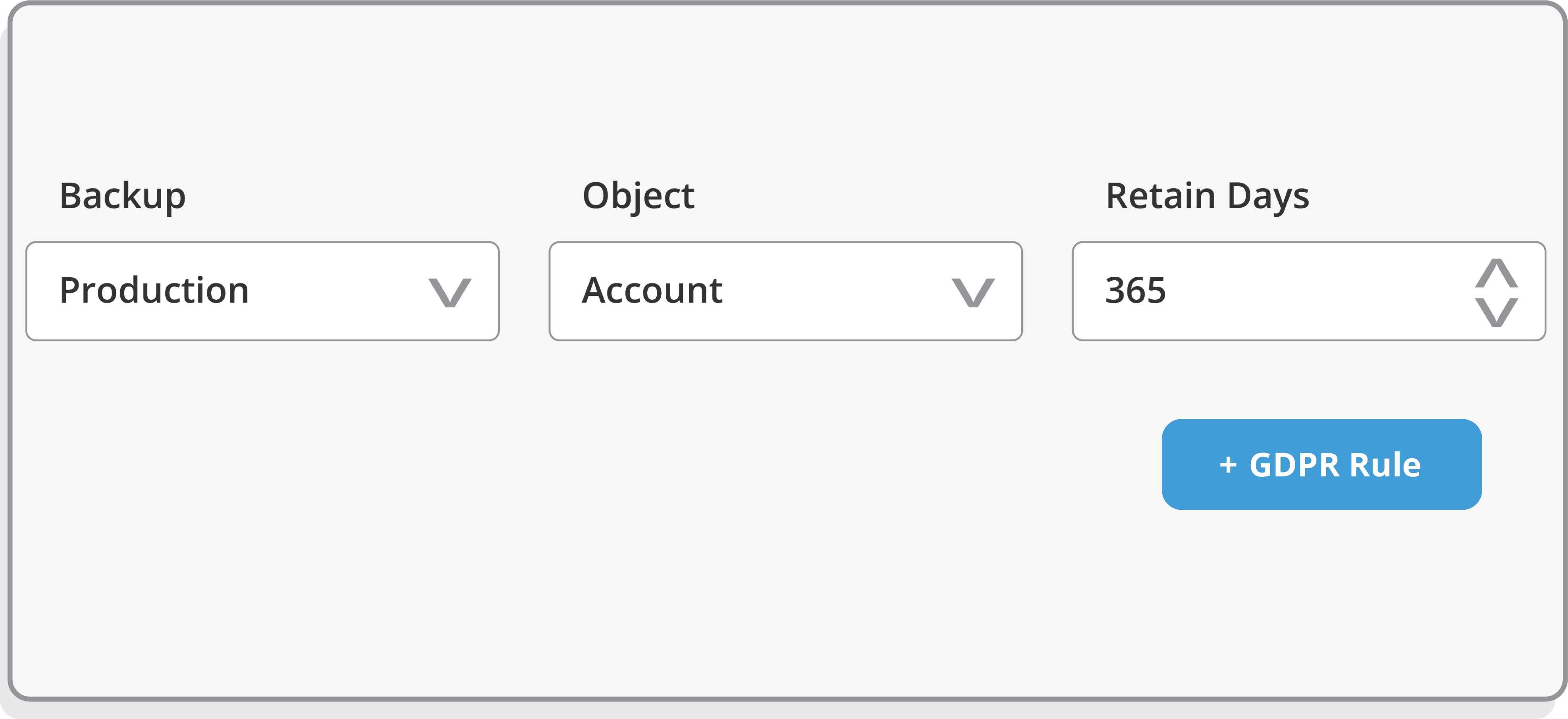

Select only the components that you need, maintaining control over the process and minimizing unnecessary data transfers.

Data Replication

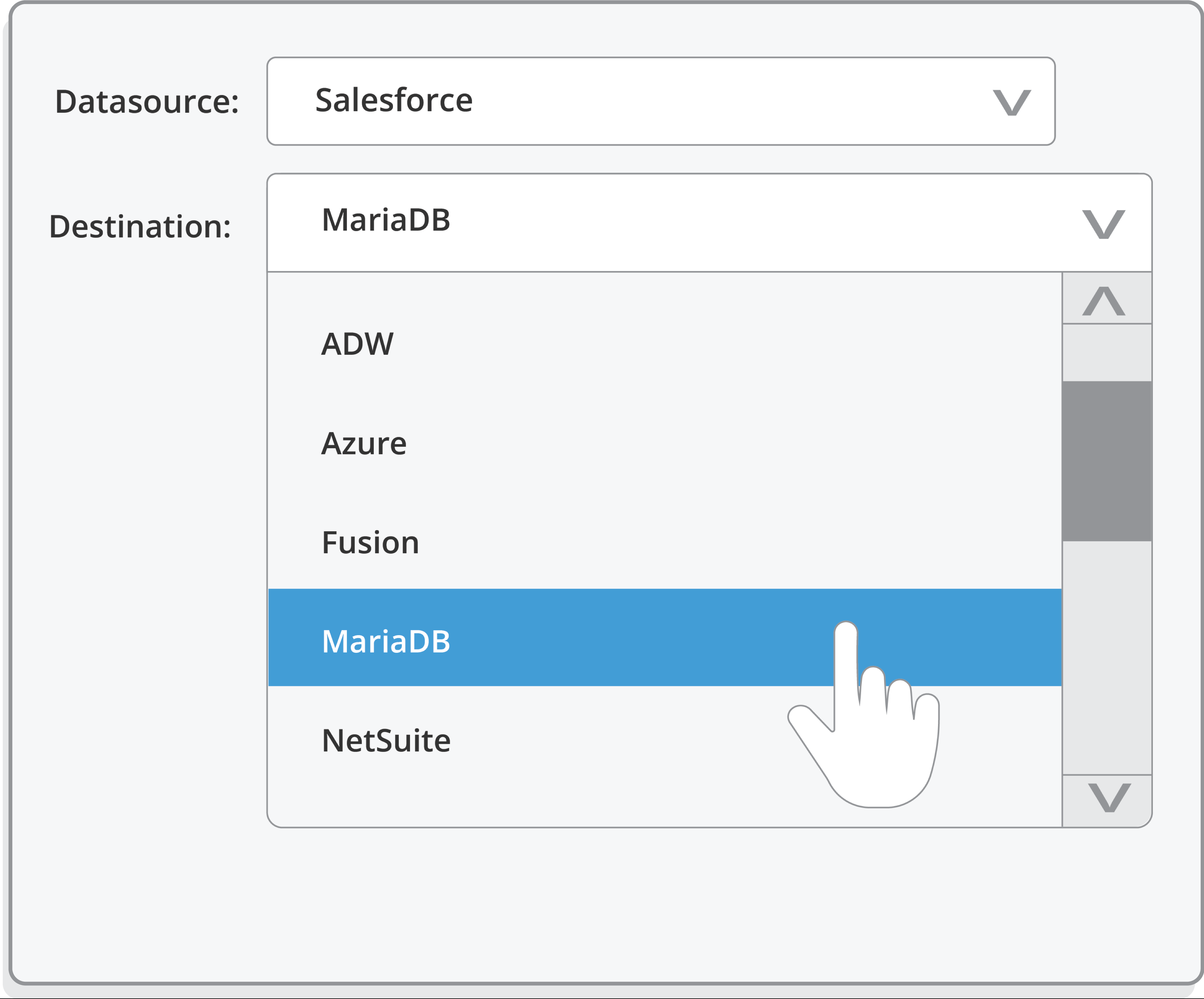

Deliver a full copy of your data in near real-time for accelerated reporting and analytics.

Bidirectional Integration

Data integration and synchronization that scale as your data grows, capturing information that is still changing so it is available in the event of a restore.

On-Demand Recovery

Faster recovery of your content documents, attachments, custom objects, related records, and more.

Salesforce Cloud Specs

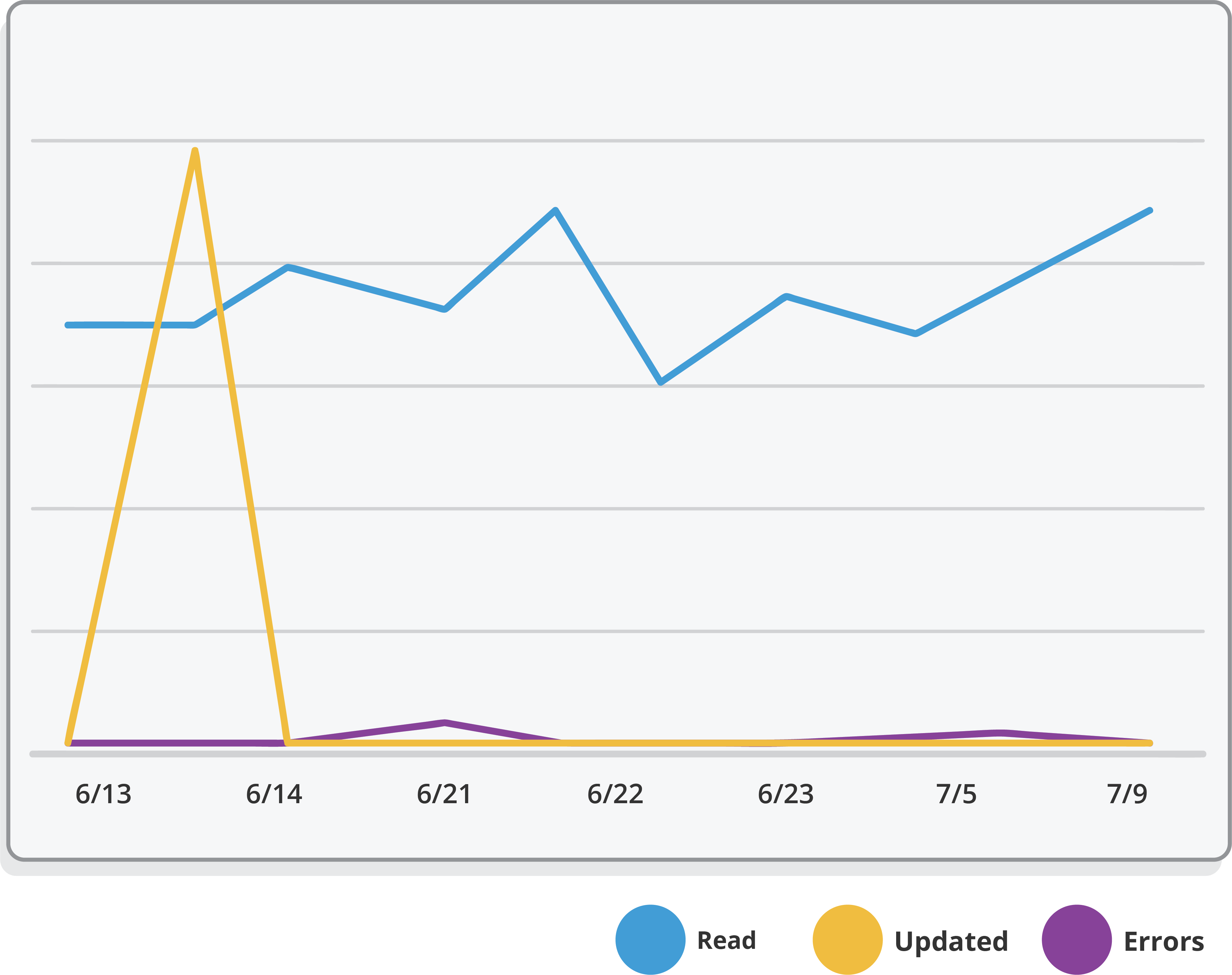

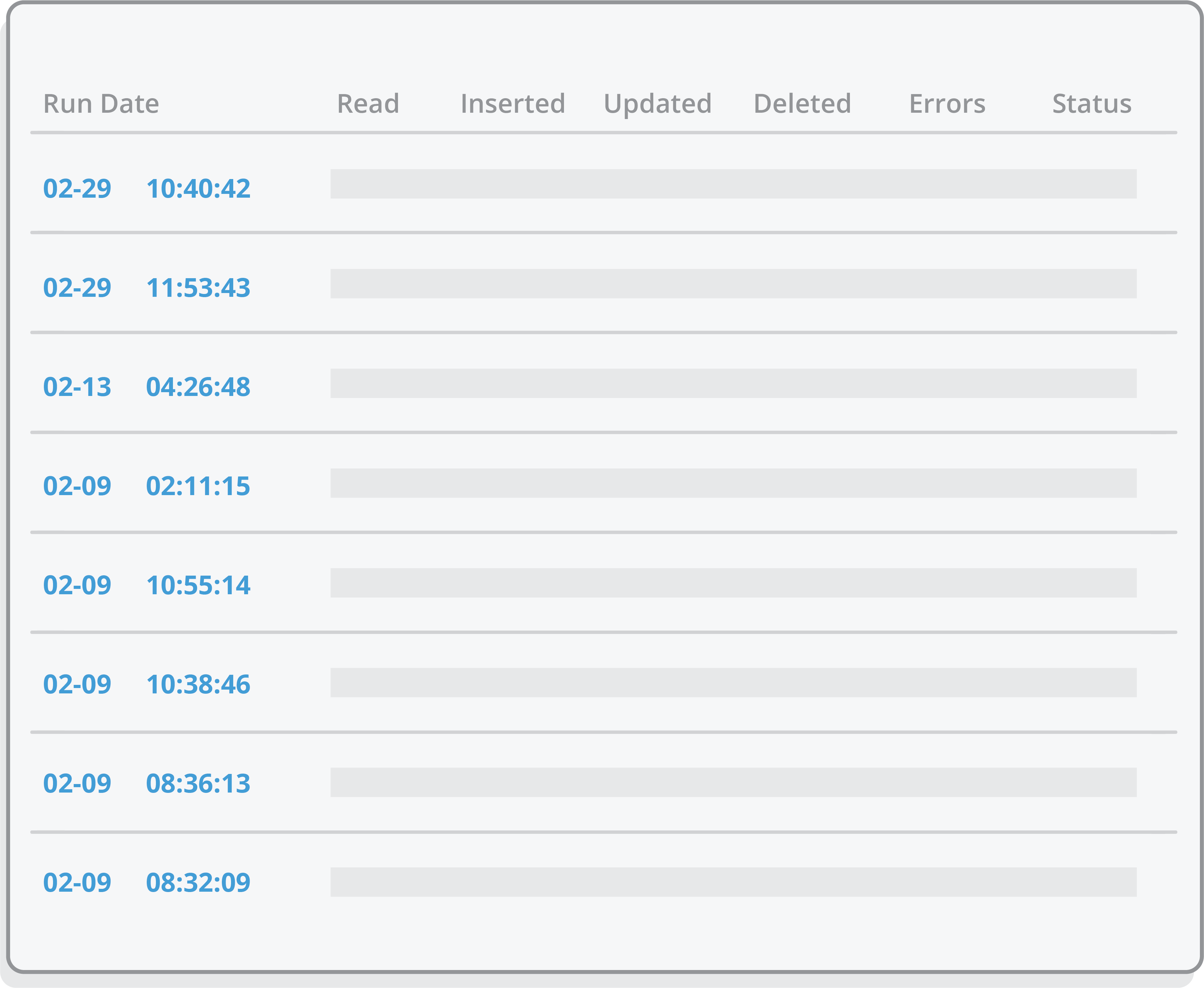

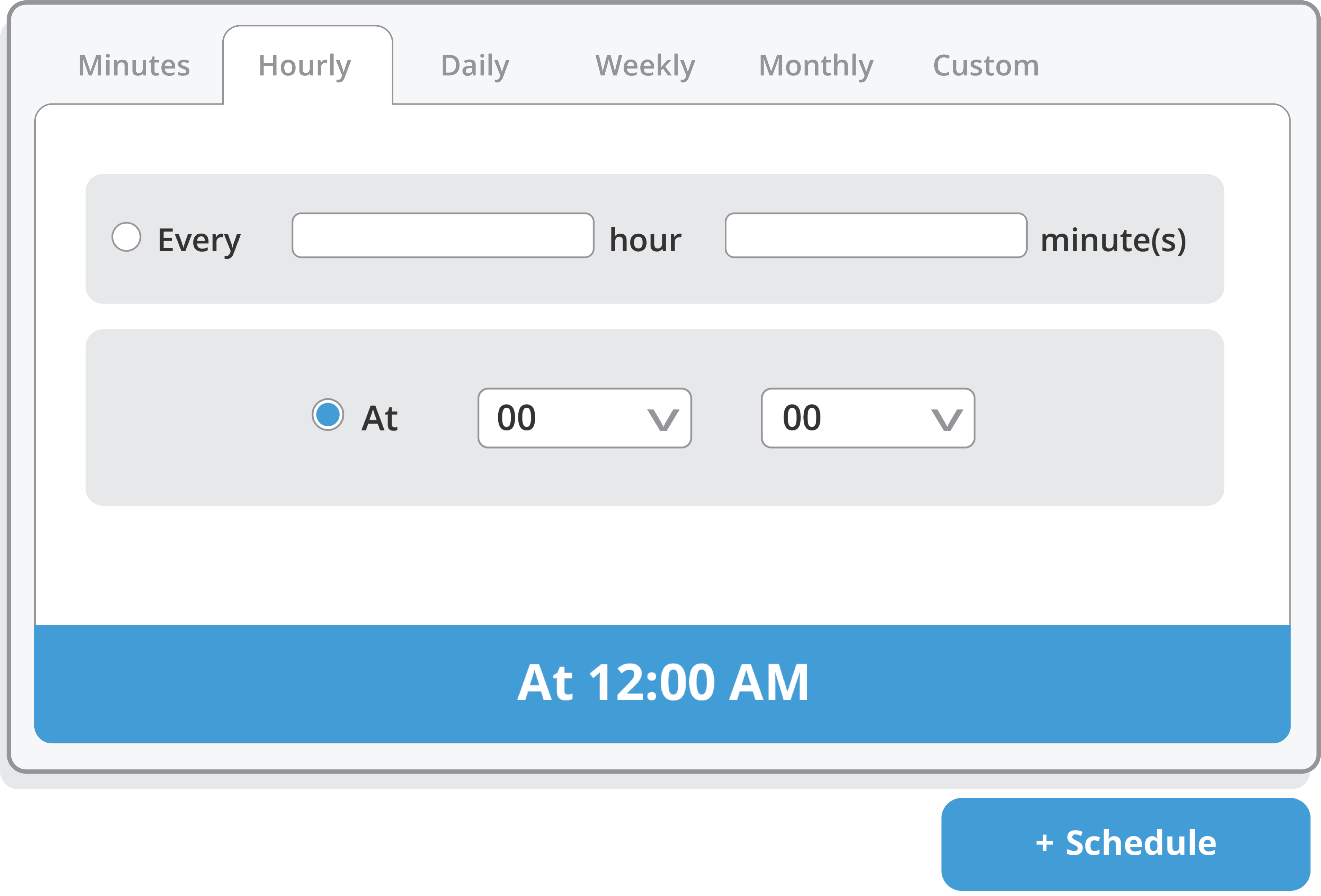

Frequency

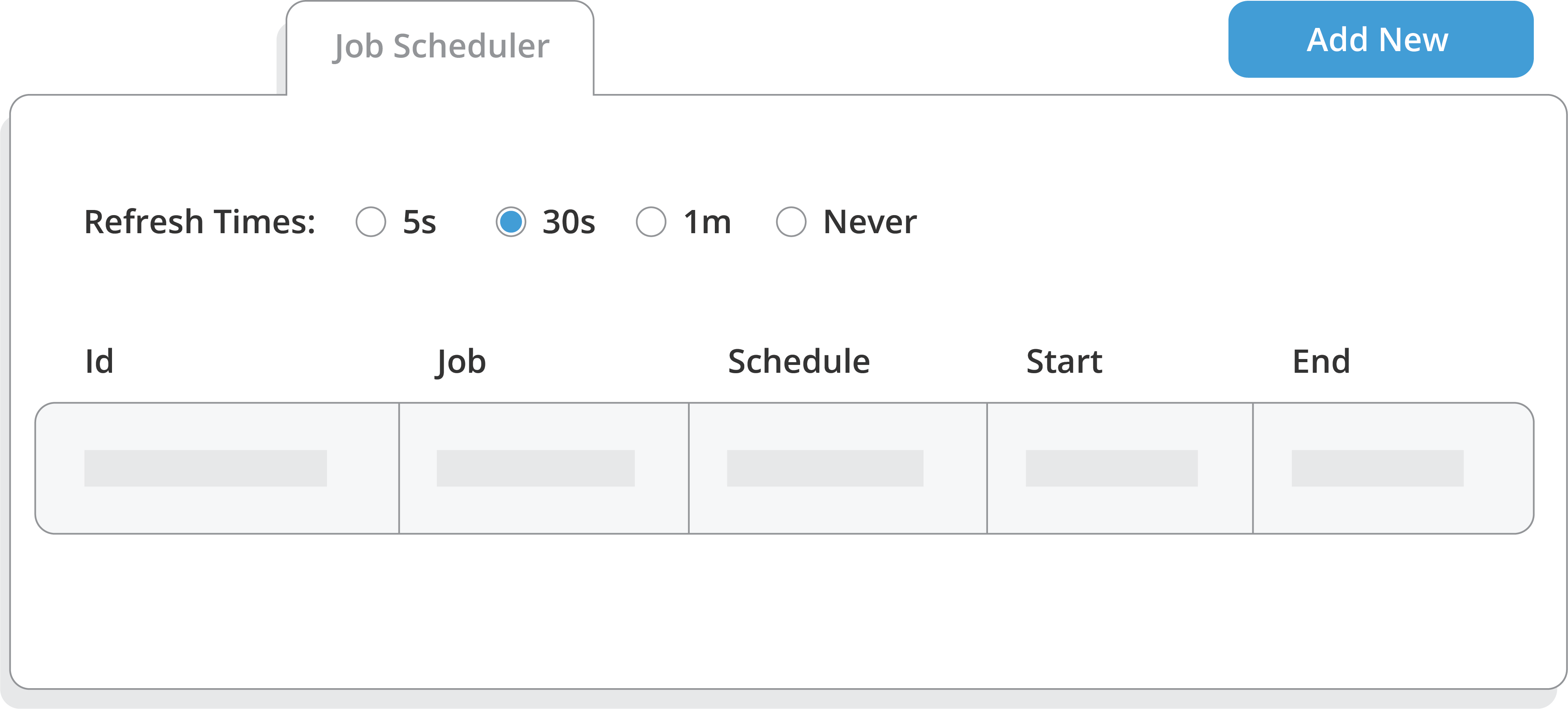

Near real-time bidirectional replication

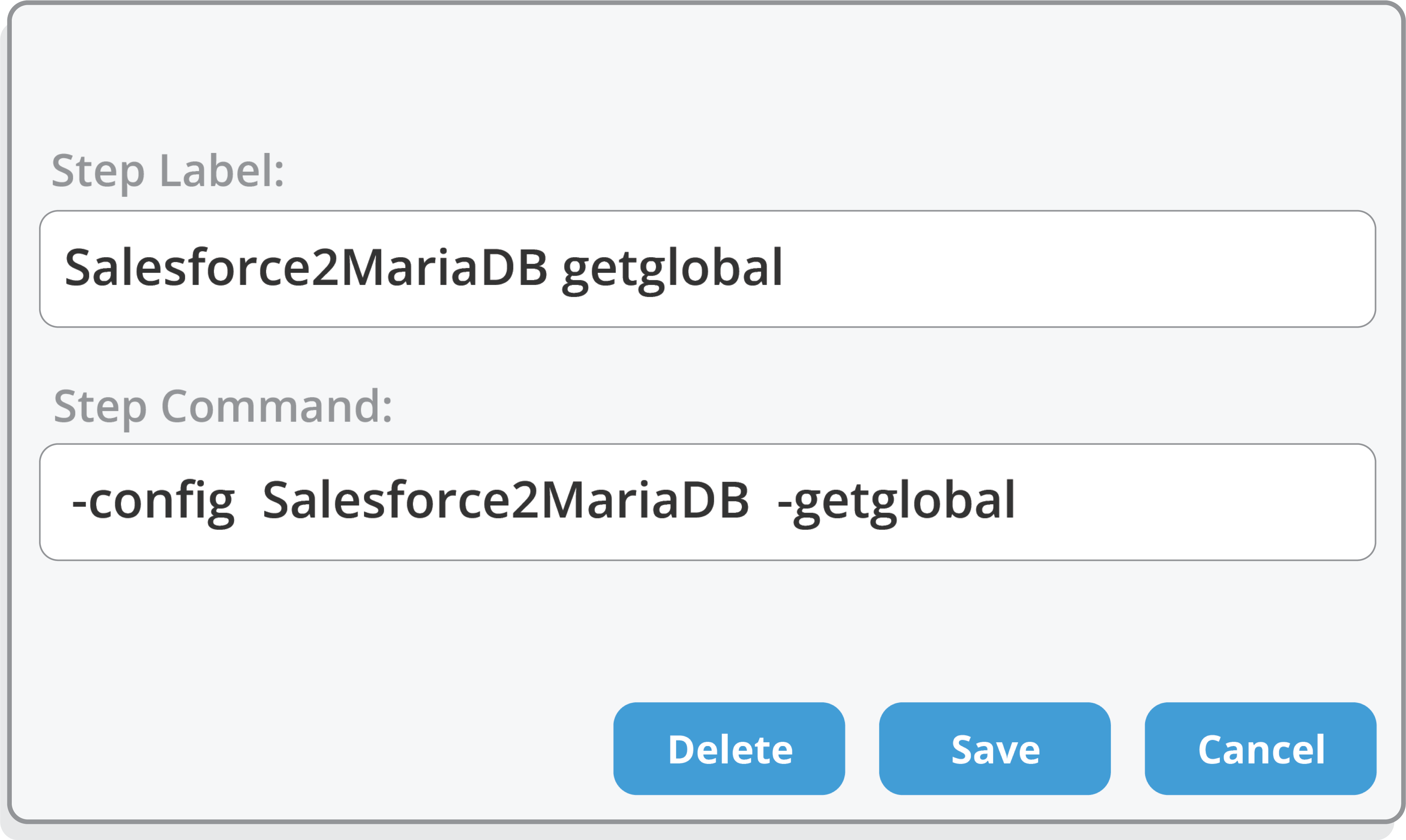

Schedule jobs at user-defined intervals

Deployment Model

Customer-owned and managed environment

Deploy on-premises or bring your own cloud infrastructure (AWS, Google, Azure, OCI, etc.)

Data Types

Standard objects & relationships

Custom objects & relationships

Files & attachments

Content documents & versions

Chatter feeds

Knowledge articles

Person accounts

History objects

Formula fields

Filtering

Object type

Hierarchy / Relationships

SOQL Query
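
To give a feel for what a SOQL-based filter looks like, here is a minimal Python sketch that builds a query for one object, optionally limited to records changed since a given time. It is an illustration only, not the product's interface: the function name `build_backup_query` and its parameters are hypothetical, and the choice of `SystemModstamp` as the change marker is an assumption.

```python
from datetime import datetime, timezone


def build_backup_query(object_name, fields, modified_since=None):
    """Build a SOQL query selecting the given fields from one object.

    Hypothetical helper for illustration; not part of any vendor API.
    """
    if not fields:
        raise ValueError("at least one field is required")
    query = f"SELECT {', '.join(fields)} FROM {object_name}"
    if modified_since is not None:
        # SOQL datetime literals are unquoted ISO 8601 values; convert to UTC.
        stamp = modified_since.astimezone(timezone.utc).strftime("%Y-%m-%dT%H:%M:%SZ")
        query += f" WHERE SystemModstamp >= {stamp}"
    return query


query = build_backup_query(
    "Account",
    ["Id", "Name"],
    modified_since=datetime(2024, 1, 1, tzinfo=timezone.utc),
)
print(query)
```

Filtering on a modification timestamp is one common way to keep each run small, since only records changed after the previous run need to be transferred.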

Recovery

On-demand deleted record recovery with relationships

Point-in-time data recovery
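
As a rough illustration of the idea behind point-in-time recovery, the Python sketch below picks the most recent stored version of a record captured at or before a chosen moment. It assumes versions are kept as `(captured_at, snapshot)` pairs sorted by time; that storage layout and the name `version_at` are hypothetical and are not taken from the product.

```python
from bisect import bisect_right
from datetime import datetime


def version_at(versions, point_in_time):
    """Return the latest snapshot captured at or before point_in_time.

    versions: list of (captured_at, snapshot) tuples sorted by captured_at.
    Returns None if no version existed yet at that time.
    """
    timestamps = [captured_at for captured_at, _ in versions]
    # bisect_right gives the count of versions captured at or before the target.
    index = bisect_right(timestamps, point_in_time)
    if index == 0:
        return None
    return versions[index - 1][1]


history = [
    (datetime(2024, 1, 1), {"Name": "Acme"}),
    (datetime(2024, 2, 1), {"Name": "Acme Corp"}),
]
restored = version_at(history, datetime(2024, 1, 15))
```

In this example `restored` holds the January snapshot, because the February change had not yet happened at the requested time.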

Other

History tracking

Data versioning

Reporting and analytics database population for BI tools

Relying on Salesforce to Recover Your Data?

Salesforce’s native recovery costs $10,000 USD per recovery.

- The recovery process can take six to eight weeks to complete

- Data deleted more than three months ago is not recoverable

- The recovered data is sent via .CSV files, requiring manual re-upload

- There’s no guarantee that 100% of your data will be recovered

eBook

Protect Your Salesforce Data

Brace yourself for mind-blowing insights on data loss prevention, recovery techniques, and the exclusive features of Sesame Software that automate the backup process.

Featured Resources

Do you have questions about how our tools would work for you? Read below about data warehousing, integration, and more!

How to Back Up Salesforce Data Using Sesame Software

Backing up your Salesforce data isn’t just a precaution—it’s a core part of any resilient data strategy. Whether you're preparing for accidental deletions, data corruption, or compliance audits, having a reliable backup solution can save you time,...

What Rising Platform Prices Mean for Your Data Strategy

Over the past year, we’ve seen a wave of price increases across major platforms in the data ecosystem, especially when it comes to backup tools and cloud storage. Whether it’s a bump in licensing costs or more restrictive storage tiers, many...

Preventing Unauthorized Access: Best Practices for Data Security

In the ever-evolving landscape of cyber threats, unauthorized access remains a significant concern for organizations of all sizes. As a leading provider of data management solutions, Sesame Software is committed to empowering businesses to...

Maximize Your Salesforce Investment Today!